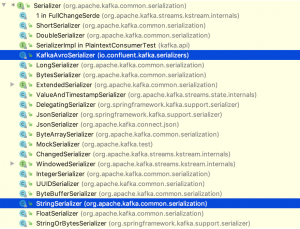

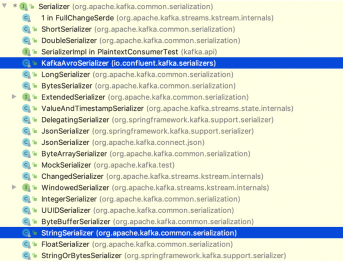

The drawback of SpecificRecord is that you need to generate a class for each schema you plan to use, in advance. Since Avro converts data into arrays of bytes, and Kafka messages also contain binary data, we can ship Avro messages with Kafka. The equivalent curl command would have been: Notice that we are registering the schema under a subject named persons-avro-value. It can retrieve a schema by version or id. When adding a new field to your schema, you have to provide a default value for the field. We will see in the next post how to consume the Avro messages we have produced! Remember that our Schema Registry runs on port 8081. The next step is to create an instance of an Avro record based on our schema: Here, we specified the schema directly in the code. The real question is: where to store the schema? A little care needs to be taken to mark fields as optional to ensure backward or forward compatibility. We can now specify that we want to read data with a specific version of a schema. The consumer that uses GenericRecord does not need a schema or a Java class generated from the schema. Another client using version 2 of the schema, which has the age field, reads the record from the NoSQL store. This is the fourth post in this series where we go through the basics of using Kafka. Note that the sample code also contains AvroSerializerTest and AvroDeserializerTest unit test cases to verify the serialization classes. We then implement the serialize() method, which takes as input a topic name and a data object, which in our case is an Avro object that extends SpecificRecordBase. Conversion from the object to a byte array is done by a Serializer. In the testReceiver() test case an Avro User object is created using the Builder methods.
Although the latest versions of Kafka allow ExtendedSerializers and ExtendedDeserializers to access headers, we decided to include the schema identifier in the Kafka record's key and value instead of adding record headers. The code of this tutorial can be found here. Producer applications do not need to convert data directly to byte arrays. Apache Avro is a binary serialization format. Make sure you have downloaded the Confluent Platform, then start the Schema Registry: The Schema Registry is running on port 8081. The drawback of GenericRecord is the lack of type-safety. Furthermore, when producing to multiple topics, we might want to set different schemas for different topics and find out the schema from the topic name supplied as a parameter to the method serialize(T, String). Consumers receive payloads and deserialize them with Kafka Avro Deserializers, which use the Confluent Schema Registry. When a compatible schema is created, it can be considered the next version of the schema. If we don't specify the URL, the (de)serializer will complain when we try to send/read a message. So far we've seen how to produce and consume simple String records using Java and console tools. Avro supports primitive types like int, string, and bytes, and complex types like record and enum. Nothing here indicates the format of the messages. Or, how to produce and consume Kafka records using Avro serialization in Java. By running docker-compose ps, we can see that the Kafka broker is available on port 9092, while the Schema Registry runs on port 8081. It is generally more efficient to set the schema at initialization time. default: the default value for the field, used by the consumer to populate the value when the field is missing from the message. This enables looking up the reader schema by name.
On the consumer side, similar Deserializers convert byte arrays to an object the application can deal with. When serializing a record, we first need to figure out which schema to use. Then we will need to import the Kafka Avro Serializer and Avro JARs into our Gradle project. You can add a field with a default to a schema. You can change a field's default value to another value or add a default value to a field that did not have one. The Kafka Producer creates a record/message, which is an Avro record. We'll call our message SimpleMessage, and it will have two fields: Avro schemas are written in a JSON format, so our SimpleMessage schema will look like this: The schema consists of a couple of elements: Each field in a schema is a JSON object with multiple attributes: For more info on Avro data types and schemas, check the Avro spec. Each Avro schema describes one or more Avro records. You use KafkaAvroSerializer from the Producer and point it to the Schema Registry. OK, the next thing is to see how an Avro schema gets translated into a Java object.
We'll add the plugin to our pom.xml: The Avro plugin is configured above to generate classes based on schemas in the src/main/avro folder and to store the classes in target/generated-sources/avro/. An instance of a GenericRecord allows us to access the schema fields either by index or by name, as seen below: Using a GenericRecord is ideal when a schema is not known in advance or when you want to handle multiple schemas with the same code (e.g. in a Kafka Connector). This is why, when using KafkaAvro(De)Serializer in a producer or a consumer, we need to provide the URL of the Schema Registry. First, we prepare the properties the producer needs. In Apache Kafka, Java applications called producers write structured messages to a Kafka cluster (made up of brokers). In our case, the name is SimpleMessage. Namespace: the namespace of the schema that qualifies the name. As a reminder, our model looks like this: The corresponding Avro schema would be (documentation is available on the project's site): Let's save this under src/main/resources/persons.avsc (avsc = AVro SChema). The Class targetType of the AvroDeserializer is needed to allow the deserialization of a consumed byte[] to the proper target object (in this example the User class). But to read Avro records, you require the schema that the data was serialized with. For more information on canonical forms, consult the Avro specification for Parsing Canonical Form for Schemas. The consumer uses the schema id to look up the full schema from the Confluent Schema Registry if it is not already cached. Kafka is not aware of the structure of data in a record's key and value. That way, your code always produces the right type of data, even if someone else changes the schema registered in the Schema Registry. GenericRecord's put and get methods work with Object.
Now, all we have to do is subscribe our consumer to the topic and start consuming: In the lines marked 1 and 2 above, you can see how the fields of SimpleMessage are accessed using proper getter methods. How this interface is implemented is covered in Implementing a Schema Store in a future blog post. It relies on schemas (defined in JSON format) that define what fields are present and their types. As mentioned before, we need a one-to-one mapping between schemas and their identifiers. But sending thousands or millions of messages per second with the same schema is a huge waste of bandwidth and storage space. The Confluent Schema Registry supports checking schema compatibility for Kafka. For this reason, we introduced schema names. Now we can read every record written with a compatible version of the schema as if it was written with the specified version. If you added the age field and it was not optional, i.e., the age field did not have a default, then the Schema Registry could reject the schema, and the Producer could never add it to the Kafka log. Kafka ships with a number of built-in (de)serializers, but an Avro one is not included. To learn more about the Gradle Avro plugin, please read this article on using Avro. When two schemas satisfy a set of compatibility rules, data written with one schema (called the writer schema) can be read as if it was written with the other one (called the reader schema). When you execute mvn compile, the SimpleMessage class will be generated in the target folder. More often than not, there is one class we want to use for our records. With the Kafka Avro Serializer, the schema is registered if needed, and then the serializer writes the data together with the schema id. In such cases, one major pain point can be the coordination of the agreed-upon message format between producers and consumers. You can change a type to a union that contains the original type.
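The idea of writing the schema id next to the serialized data can be illustrated with a small, library-free sketch. It assumes the layout that Confluent documents for its serializers, namely one magic byte (0) followed by a 4-byte big-endian schema id and then the Avro payload. The class and method names (SchemaIdFraming, frame, readSchemaId, readPayload) are hypothetical and are not part of any Kafka or Confluent API.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

// Hypothetical helper: frames an already-serialized Avro payload with a schema id.
final class SchemaIdFraming {
    // Assumed layout: [magic byte 0][4-byte schema id, big-endian][Avro bytes]
    static final byte MAGIC_BYTE = 0x0;
    static final int HEADER_SIZE = 1 + Integer.BYTES;

    static byte[] frame(int schemaId, byte[] avroPayload) {
        return ByteBuffer.allocate(HEADER_SIZE + avroPayload.length)
                .put(MAGIC_BYTE)
                .putInt(schemaId)   // ByteBuffer writes big-endian by default
                .put(avroPayload)
                .array();
    }

    static int readSchemaId(byte[] message) {
        ByteBuffer buffer = ByteBuffer.wrap(message);
        if (buffer.get() != MAGIC_BYTE) {
            throw new IllegalArgumentException("Unknown magic byte");
        }
        return buffer.getInt();
    }

    static byte[] readPayload(byte[] message) {
        // Everything after the 5-byte header is the Avro-encoded record.
        return Arrays.copyOfRange(message, HEADER_SIZE, message.length);
    }
}
```

Because only five bytes of header travel with each record, the overhead stays constant no matter how large the schema itself is.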
Confluent uses schema compatibility checks to see if the producer's schema and the consumer's schema are compatible and to do schema evolution if needed. Instead, it is a good practice to store the schema alongside the code. The age field is missing from the record because the Consumer wrote it with version 1, thus the client reads the record and the age is set to the default value of -1. Note that we also update the KafkaTemplate generic type. The first thing to know is that there are two flavors of Avro records: Before we can start coding, we need to add the library that adds Avro support to the Kafka client; this library is stored in Confluent's Maven repository: Now, because we are going to use generic records, we need to load the schema. So we now have two records to consume. In the following tutorial, we will configure, build and run an example in which we will send/receive an Avro message to/from Apache Kafka using Apache Avro, Spring Kafka, Spring Boot and Maven. When reading a message, the deserializer will find the ID of the schema in the message, and fetch the schema from the Schema Registry to deserialize the Avro data. It allows the storage of a history of schemas which are versioned. Apache Avro provides tools to generate Java code from schemas. Type record means that the schema describes a complex data type, which includes other fields. The Confluent Schema Registry stores Avro schemas for Kafka producers and consumers. But systems that read records from Kafka do care about data in those records. Avro relies on schemas composed of primitive types which are defined using JSON.
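The "find the id, then fetch the schema" step on the reading side could be sketched as a small cache in front of the registry. This is only an illustration: SchemaCache and the registryLookup function are hypothetical stand-ins, and schemas are kept as plain JSON strings here, whereas a real deserializer would use the Schema Registry client and hold parsed Avro Schema objects.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.IntFunction;

// Hypothetical cache: resolves a schema id to its JSON definition,
// asking the registry only the first time an id is seen.
final class SchemaCache {
    private final Map<Integer, String> schemasById = new ConcurrentHashMap<>();
    private final IntFunction<String> registryLookup;

    // registryLookup is an assumed callback that fetches a schema by id,
    // for example through the Schema Registry REST API.
    SchemaCache(IntFunction<String> registryLookup) {
        this.registryLookup = registryLookup;
    }

    String schemaFor(int schemaId) {
        // computeIfAbsent calls the lookup only on a cache miss.
        return schemasById.computeIfAbsent(schemaId, id -> registryLookup.apply(id));
    }
}
```

Since a topic usually carries only a handful of schema versions, almost every record after the first few is served from the map without a network call.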
Notice that, just like the producer, we have to tell the consumer where to find the Registry, and we have to configure the Kafka Avro Deserializer. With both schemas available we can create a GenericDatumReader and read the record. Let's see what the difference is and when to use which. A Kafka record (formerly called message) consists of a key, a value and headers. The only thing left to do is to update the Sender class so that its send() method accepts an Avro User object as input. As I've mentioned in the previous post, every Avro message contains the schema used to serialize it. The SpringKafkaApplicationTest test case demonstrates the above sample code. KafkaProducer is a generic class that needs its user to specify key and value types. Java classes generated from Avro schemas are subclasses of the latter, while the former can be used without prior knowledge of the data structure worked with. Serializing the ID as part of the message gives us a compact solution, as all of the magic happens in the Serializer/Deserializer. We will now see how to serialize our data with Avro. SpecificRecord is an interface from the Avro library that allows us to use an Avro record as a POJO. An Avro record is a complex data type in Avro, consisting of other fields, with their own data types (primitive or complex). Deserialization logic becomes simpler, because the reader schema is fixed at configuration time and does not need to be looked up by schema name. The schema compatibility checks can be configured globally or per subject. You can add an alias.
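As a rough sketch of the consumer configuration described here, the settings can be collected in a plain java.util.Properties object. The property keys and the deserializer class names are the standard Kafka and Confluent ones as far as I know; the AvroConsumerSettings class, the group id, and the addresses passed in are placeholders chosen for illustration. Setting specific.avro.reader to true is what asks the deserializer for generated SpecificRecord classes instead of GenericRecord.

```java
import java.util.Properties;

// Hypothetical helper that gathers the consumer settings in one place.
final class AvroConsumerSettings {
    static Properties build(String bootstrapServers, String schemaRegistryUrl, String groupId) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        props.setProperty("group.id", groupId);
        props.setProperty("key.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        // Avro values are decoded with the help of the Schema Registry.
        props.setProperty("value.deserializer",
                "io.confluent.kafka.serializers.KafkaAvroDeserializer");
        props.setProperty("schema.registry.url", schemaRegistryUrl);
        // Return generated classes (SpecificRecord) rather than GenericRecord.
        props.setProperty("specific.avro.reader", "true");
        return props;
    }
}
```

With the Kafka client and Confluent serializer libraries on the classpath, the resulting Properties can be handed to a KafkaConsumer constructor, for example with "localhost:9092" and "http://localhost:8081" as the two addresses.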
This user is then sent to the 'avro.t' topic. Now we need to change the SenderConfig to start using our custom Serializer implementation. You can remove or add a field alias (keep in mind that this could break some consumers that depend on the alias). We specify our brokers, serializers for the key and the value, as well as the URL for the Schema Registry. The reader schema has to be compatible with the schema that the data was serialized with, but does not need to be equivalent. At initialization time we read the desired schema versions per schema name and store metadata in readerSchemasByName for quick access. In this case, since the schema is for the value of the messages, the suffix is -value, but this means we could also use Avro for the keys of our messages. To write the consumer, you will need to configure it to use the Schema Registry and to use the KafkaAvroDeserializer. The result should be a successful build during which the following logs are generated: If you would like to run the above code sample, you can get the full source code here. When you send Avro messages to Kafka, the messages contain an identifier of a schema stored in the Schema Registry. The Producer uses version 2 of the Employee schema, creates a com.cloudurable.Employee record, sets the age field to 42, and then sends it to the Kafka topic new-employees. Let's create a schema for the messages we'll be sending through Kafka. An additional step is that we have to tell it to use the generated version of the Employee object.
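The producer properties mentioned above (brokers, key and value serializers, and the Schema Registry URL) might look roughly like the following. The keys and serializer class names are the standard Kafka and Confluent ones to my knowledge, while AvroProducerSettings and the argument values are hypothetical and used only for illustration.

```java
import java.util.Properties;

// Hypothetical helper that gathers the producer settings in one place.
final class AvroProducerSettings {
    static Properties build(String bootstrapServers, String schemaRegistryUrl) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        // Keys are plain strings, values are Avro records.
        props.setProperty("key.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        props.setProperty("value.serializer",
                "io.confluent.kafka.serializers.KafkaAvroSerializer");
        // Without this URL the Avro serializer cannot register or look up schemas.
        props.setProperty("schema.registry.url", schemaRegistryUrl);
        return props;
    }
}
```

Using string keys keeps this sketch free of library imports; in a real project the same keys are available as constants on ProducerConfig.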
So it makes sense to hook in at the Serializer and Deserializer level and allow developers of producer and consumer applications to use the convenient interface provided by Kafka. If the consumer's schema is different from the producer's schema, then the value or key is automatically modified during deserialization to conform to the consumer's reader schema if possible. For serialization we can use the class to find out about the schema identifier to use. Name: the name of the schema. The Consumer consumes records from new-employees using version 1 of the Employee schema. Then we'll define properties for the Kafka producer, same as in the GenericRecord example: The only difference compared to the GenericRecord example is the type for the value of the Kafka record, which is now SpecificRecord. This again means you need the Avro schema in advance, to be able to generate the Java class. None disables schema validation and is not recommended. This object might hold additional metadata the application requires. Full compatibility means a new version of a schema is backward and forward compatible. Since the Consumer is using version 1 of the schema, the age field gets removed during deserialization. If we did not, then it would use Avro GenericRecord instead of our generated Employee object, which is a SpecificRecord. SchemaProvider objects can look up the instances of VersionedSchema. It's the same schema we used in the GenericRecord example above. Notice that we configure the Schema Registry and the KafkaAvroSerializer as part of the Producer setup. From Kafka's perspective, schema evolution happens only during deserialization at the Consumer (read).
Then we instantiate the Kafka producer: As you see, we are using the String serializer for the keys and Avro for values. If you check the src/main/avro folder, you will see the Avro schema for our SimpleMessage. To run the above example, you need to start up Kafka and ZooKeeper. This is done by setting the 'VALUE_SERIALIZER_CLASS_CONFIG' property to the AvroSerializer class. By parsing the schema we get a Schema object, which we use to instantiate a new GenericRecord. Kafka provides some primitive serializers. Next, we create the instance of the SimpleMessage: And lastly, we create a Kafka record and write it to the avro-topic topic: Note that both producers above have written to a topic called avro-topic. Sometimes it is easier to refer to schemas by names. The Deserializer looks up the full schema from the cache or the Schema Registry based on the id. Since you don't have to send the schema with each set of records, this saves time. Just like with the Sender class, the argument of the receive() method of the Receiver class needs to be changed to the Avro User class. Notice that we include the Kafka Avro Serializer lib (io.confluent:kafka-avro-serializer:3.2.1) and the Avro lib (org.apache.avro:avro:1.8.1). To tackle this we will create an AvroSerializer class that implements the Serializer interface specifically for Avro objects. This logic is omitted in our examples for the sake of brevity and simplicity. The Schema Registry provides a RESTful interface for managing Avro schemas. Now we need to register it in the Schema Registry. Recall that the Schema Registry allows you to manage schemas using the following operations: Recall that all of this is available via a REST API with the Schema Registry.
This class is then usually generated from an Avro schema. Backward compatibility means data written with an older schema is readable with a newer schema. This means you will want to use the Confluent distribution to use the Schema Registry, not the Apache distribution. You can change a field's order attribute. We saw in the previous posts how to produce and consume data in JSON format. The consumer has its own schema, which could be different from the producer's. If you have never used Avro before, please read Avro Introduction for Big Data and Data Streams. Nested fields are supported as well as arrays. Similarly, Java applications called consumers read these messages from the same cluster. Don't rename an existing field (use aliases instead). Storing the schema in each and every Kafka record, however, adds significant overhead in terms of storage space and network utilization. When it comes to representing an Avro record in Java, the Avro library provides two interfaces: GenericRecord and SpecificRecord. Notice that the producer expects GenericRecords as the value of the Kafka record. Not sending the schema with each record or batch of records speeds up the serialization, as only the id of the schema is sent. The classes can be generated using the avro-tools.jar or via the Avro Maven plugin; we will use the latter in this example. I created this blog post based on a user request, so if you found this tutorial useful or would like to see another variation, let me know. Kafka stores and transports byte arrays in its topics.
When the consumer schema is not identical to the producer schema used to serialize the Kafka record, a data transformation is performed on the Kafka record's key or value. Apache Avro is a data serialization system. All the data will be obtained by the deserializer from the Schema Registry. We can check that our schema has been registered: Now, we want to change our producer code to send Avro data. Finally, the CountDownLatch from the Receiver is used to verify that a message was successfully received. The DefaultKafkaConsumerFactory is created by passing a new AvroDeserializer that takes 'User.class' as constructor argument. If you want to make your schema evolvable, then follow these guidelines. The Kafka Avro Serializer keeps a cache of registered schemas from the Schema Registry and their schema ids. Let's look at it again: This line is necessary if you want your Avro records to be properly converted into the expected Java class (in our case, this is SimpleMessage). An embedded Kafka and ZooKeeper server are automatically started using a JUnit ClassRule. This may be done directly by identifier or by name and version. This is done by generating a Java class (or classes) from the schema, by using avro-maven-plugin. Next, let's write the Producer as follows. When the Consumer does this, the age field is missing from the record that it writes to the NoSQL store. We will generate a Java class from the Avro schema using the avro-maven-plugin.
The SimpleMessage fields are: content, a string field holding the message we want to send, and date_time, a human-readable date-time showing when the message was sent. See also https://github.com/codingharbour/kafka-avro. Let's see how we can create the consumers. The Schema Registry offers a REST API with which you can interact using curl, for instance. The consumer schema is the schema the consumer is expecting the record/message to conform to.
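Registering a schema through the Schema Registry REST API can also be done from Java instead of curl. The sketch below only builds the HTTP request, using the JDK 11+ java.net.http API. It assumes the registry's documented endpoint POST /subjects/{subject}/versions with the application/vnd.schemaregistry.v1+json content type; the SchemaRegistration class and its method names are hypothetical, and the escaping is deliberately naive (it assumes a single-line schema without control characters).

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical helper that prepares a "register schema" request.
final class SchemaRegistration {
    // The registry expects the Avro schema embedded as a JSON string: {"schema": "..."}
    static String toRequestBody(String schemaJson) {
        String escaped = schemaJson.replace("\\", "\\\\").replace("\"", "\\\"");
        return "{\"schema\": \"" + escaped + "\"}";
    }

    static HttpRequest buildRequest(String registryUrl, String subject, String schemaJson) {
        URI uri = URI.create(registryUrl + "/subjects/" + subject + "/versions");
        return HttpRequest.newBuilder(uri)
                .header("Content-Type", "application/vnd.schemaregistry.v1+json")
                .POST(HttpRequest.BodyPublishers.ofString(toRequestBody(schemaJson)))
                .build();
    }
}
```

To actually send it, the request can be passed to HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()), for example with "http://localhost:8081" as the registry URL and "persons-avro-value" as the subject.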

The SpringKafkaApplicationTest test case demonstrates the above sample code. Deserializer, KafkaProducer is a generic class that needs its user to specify key and value types. Conversion from the object to byte array is done by a Serializer. Java classes generated from Avro schemas are subclasses of the latter, while the former can be used without prior knowledge of the data structure worked with. Serializing the ID as part of the message gives us a compact solution, as all of the magic happens in the Serializer/Deserializer. We will now see how to serialize our data with Avro. SpecificRecord is an interface from the Avro library that allows us to use an Avro record as a POJO. An Avro record is a complex data type in Avro, consisting of other fields, with their own data types (primitive or complex). Deserialization logic becomes simpler, because reader schema is fixed at configuration time and does not need to be looked up by schema name. The Schema compatibility checks can is configured globally or per subject. You can add an alias. This user is then sent to 'avro.t' topic. Now we need to change the SenderConfig to start using our custom Serializer implementation. When a compatible schema is created it can be considered a next version of the schema. Example, You can remove or add a field alias (keep in mind that this could break some consumers that depend on the alias). We specify our brokers, serializers for the key and the value, as well as the URL for the Schema Registry. The reader schema has to be compatible with the schema that the data was serialized with, but does not need to be equivalent. With both schemas available we can create a, For more information on schema compatibility, consult the, For more information on canonical forms, consult the, Avro specification for Parsing Canonical Form for Schemas, Cloudera Recognized as 2022 Gartner Peer Insights. 
At initialization time we read desired schema versions per schema name and store metadata in readerSchemasByName for quick access. Please take some time to read the Advantage of using Cloudurable. In this case, since the schema is for the value of the messages, the suffix is -value, but this means we could also use Avro for the keys of our messages. To write the consumer, you will need to configure it to use Schema Registry The result should be a successful build during which following logs are generated: If you would like to run the above code sample you can get the full source code here. When you send Avro messages to Kafka, the messages contain an identifier of a schema stored in the Schema Registry. The Producer uses version 2 of the Employee schema and creates a com.cloudurable.Employee record, and sets age field to 42, then sends it to Kafka topic new-employees. Then, producers accept instances of. Lets create a schema for the messages well be sending through Kafka. An additional step is we have to tell it to use the generated version of the Employee object. So it makes sense to hook in at Serializer and Deserializer level and allow developers of producer and consumer applications to use the convenient interface provided by Kafka. Apache Avro, If Consumers schema is different from Producers schema, then value or key is automatically modified during deserialization to conform to consumers reader schema if possible. For serialization we can use the class to find out about the schema identifier to use. Name The name of the schema. The Consumer consumes records from new-employees using version 1 of the Employee Schema. Then well define properties for the Kafka producer, same as in the GenericRecord example: The only difference compared to the GenericRecord example is the type for the value of the Kafka record, which is now SpecificRecord. Which again means you need the Avro schema in advance, to be able to generate the Java class. 
When serializing a record, we first need to figure out which Schema to use. None disables schema validation and it not recommended. This object might hold additional metadata the application requires. Full compatibility means a new version of a schema is backward and forward compatible. Kafka Consulting, Furthermore, when producing to multiple topics, we might want to set different schemas for different topics and find out the schema from the topic name supplied as parameter to method. Since Consumer is using version 1 of the schema, the age field gets removed during deserialization. If we did not, then it would use Avro GenericRecord instead of our generated Employee object, which is a SpecificRecord. Spring Kafka, SchemaProvider objects can look up the instances of VersionedSchema. Its the same schema we used in the GenericRecord example above. Notice that we configure the schema registry and the KafkaAvroSerializer as part of the Producer setup. From Kafka perspective, Schema evolution happens only during deserialization at Consumer (read). Then we instantiate the Kafka producer: As you see, we are using String serializer for the keys and Avro for values. If you check the src/main/avro folder, you will see the Avro schema for our SimpleMessage. To run the above example, you need to startup Kafka and Zookeeper. This is done by setting the 'VALUE_SERIALIZER_CLASS_CONFIG' property to the AvroSerializer class. By parsing the schema we get a Schema object, which we use to instantiate a new GenericRecord. Although latest versions of Kafka allow. Kafka provides some primitive serializers: for example. Next, we create the instance of the SimpleMessage: And lastly, we create a Kafka record and write it to the avro-topic topic: Note that both producers above have written to a topic called avro-topic. Sometimes it is easier to refer to schemas by names. Deserializer looks up the full schema from cache or Schema Registry based on id. 
Since you dont have to send the schema with each set of records, this saves time. Just like with the Sender class, the argument of the receive() method of the Receiver class needs to be changed to the Avro User class. Notice that we include the Kafka Avro Serializer lib (io.confluent:kafka-avro-serializer:3.2.1) and the Avro lib (org.apache.avro:avro:1.8.1). To tackle this we will create an AvroSerializer class that implements the Serializer interface specifically for Avro objects. SMACK/Lambda architecture consutling! CA 94111 Now we can read every record written with a compatible version of the schema as if it was written with the specified version. This logic is omitted in our examples for the sake of brevity and simplicity. The Schema Registry and provides RESTful interface for managing Avro schemas Now we need to register it in the Schema Registry. We provide onsite Go Lang training which is instructor led. Recall that the Schema Registry allows you to manage schemas using the following operations: Recall that all of this is available via a REST API with the Schema Registry. Spring, This class is then usually generated from an Avro schema. Backward compatibility means data written with older schema is readable with a newer schema. This means you will want to use the Confluent distribution to use the Schema Registry, not the Apache distribution. Serializer, Spring Boot, and to use the KafkaAvroDeserializer. You can change a fields order attribute. We saw in the previous posts how to produce and consume data in JSON format. Consumer has its schema which could be different than the producers. If you have never used Avro before, please read Avro Introduction for Big Data and Data Streams. Nested fields are supported as well as arrays. Similarly, Java applications called consumers read these messages from the same cluster. Dont rename an existing field (use aliases instead). Save my name, and email in this browser for the next time I comment. 
Storing the schema in each and every Kafka record, however, adds significant overhead in terms of storage space and network utilization. When it comes to representing an Avro record in Java, the Avro library provides two interfaces: GenericRecord and SpecificRecord. Notice that the producer expects GenericRecords as the value of the Kafka record. The reader schema has to be compatible with the schema that the data was serialized with, but does not need to be equivalent. Not sending the schema with each record or batch of records speeds up serialization, as only the id of the schema is sent. The classes can be generated using avro-tools.jar or via the Avro Maven plugin; we will use the latter in this example. I created this blog post based on a user request so if you found this tutorial useful or would like to see another variation, let me know. Kafka stores and transports byte arrays in its topics. When the Consumer schema is not identical to the Producer schema used to serialize the Kafka record, a data transformation is performed on the Kafka record's key or value. Apache Avro is a data serialization system. All the data will be obtained by the deserializer from the schema registry. We can check that our schema has been registered: Now, we want to change our producer code to send Avro data. Finally, the CountDownLatch from the Receiver is used to verify that a message was successfully received. The DefaultKafkaConsumerFactory is created by passing a new AvroDeserializer that takes 'User.class' as constructor argument. If you want to make your schema evolvable, then follow these guidelines. The Kafka Avro Serializer keeps a cache of the schemas registered in the Schema Registry and their schema ids.
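The caching behaviour mentioned above can be sketched with plain JDK collections. This is an illustration of the idea only, not the Confluent client's implementation: the class name is hypothetical, schemas are represented as strings, and the registry call is passed in as a function so the example runs without a registry.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.IntFunction;

/** Hypothetical cache: asks the registry for a schema id only the first time it is seen. */
final class CachingSchemaLookup {
    private final Map<Integer, String> schemasById = new ConcurrentHashMap<>();
    private final IntFunction<String> registryFetch; // stand-in for a Schema Registry call
    private final AtomicInteger registryCalls = new AtomicInteger();

    CachingSchemaLookup(IntFunction<String> registryFetch) {
        this.registryFetch = registryFetch;
    }

    /** Returns the cached schema, fetching and storing it on a cache miss. */
    String schemaFor(int schemaId) {
        return schemasById.computeIfAbsent(schemaId, id -> {
            registryCalls.incrementAndGet();
            return registryFetch.apply(id);
        });
    }

    /** Number of lookups that actually reached the registry. */
    int registryCalls() {
        return registryCalls.get();
    }

    public static void main(String[] args) {
        CachingSchemaLookup lookup = new CachingSchemaLookup(id -> "schema-" + id);
        lookup.schemaFor(7);
        lookup.schemaFor(7); // served from the cache
        System.out.println("registry calls: " + lookup.registryCalls());
    }
}
```

Because registered schemas are identified by a stable id, a cache like this never needs invalidation, which is what makes the per-record lookup cheap.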
Let's look at it again: This line is necessary if you want your Avro records to be properly converted into the expected Java class (in our case, this is SimpleMessage). An embedded Kafka and ZooKeeper server are automatically started using a JUnit ClassRule. This may be done directly by identifier or by name and version. This is done by generating a Java class (or classes) from the schema, by using avro-maven-plugin. Next, let's write the Producer as follows. When the Consumer does this, the age field is missing from the record that it writes to the NoSQL store. We will generate a Java class from the Avro schema using the avro-maven-plugin. 2022 Cloudera, Inc. All rights reserved. Copyright Dejan Maric 2019 - All Rights Reserved. https://github.com/codingharbour/kafka-avro. The fields are content, a string field holding the message we want to send, and date_time, a human-readable date-time showing when the message was sent. Let's see how we can create the consumers. It offers a REST API with which you can interact with Curl, for instance. The consumer schema is the schema the consumer is expecting the record/message to conform to. I wrote a little example to do this so I could understand the Schema Registry a little better using the OkHttp client from Square (com.squareup.okhttp3:okhttp:3.7.0+) as follows: I suggest running the example and trying to force incompatible schemas to the Schema Registry and note the behavior for the various compatibility settings. As before, we'll start with preparing properties for the consumer and instantiating it: Everything is the same as with the previous consumer, except the third line from the bottom.
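The consumer properties referred to above are not shown in this text, so the following is a hedged reconstruction rather than the original listing. It uses plain string keys so that it compiles with the JDK alone; the class name, method name and argument values are hypothetical. The `specific.avro.reader` entry is the setting that makes the deserializer return the generated class (for example SimpleMessage) instead of a GenericRecord.

```java
import java.util.Properties;

/** Hypothetical helper that assembles consumer settings for Avro values. */
final class AvroConsumerConfig {
    static Properties specificRecordConsumer(String bootstrapServers,
                                             String schemaRegistryUrl,
                                             String groupId) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        props.setProperty("group.id", groupId);
        // Keys are plain strings, values are Avro resolved through the Schema Registry.
        props.setProperty("key.deserializer",
                "org.apache.kafka.common.serialization.StringDeserializer");
        props.setProperty("value.deserializer",
                "io.confluent.kafka.serializers.KafkaAvroDeserializer");
        props.setProperty("schema.registry.url", schemaRegistryUrl);
        // Without this, values are returned as GenericRecord instead of the generated class.
        props.setProperty("specific.avro.reader", "true");
        return props;
    }

    public static void main(String[] args) {
        Properties props = specificRecordConsumer(
                "localhost:9092", "http://localhost:8081", "avro-consumer-group");
        props.list(System.out);
    }
}
```

These properties would then be passed to a `KafkaConsumer` constructor; the ports match the broker (9092) and Schema Registry (8081) used elsewhere in this tutorial.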
Then we can create a DatumWriter and serialize the object. When using the Confluent Schema Registry, producers don't have to send the schema, just the schema id, which is unique. In some organizations, there are different groups in charge of writing and managing the producers and consumers. It uses a JSON document called a schema to describe data structures. To post a new schema you could do the following: If you have a good HTTP client, you can basically perform all of the above operations via the REST interface for the Schema Registry. One of the common mistakes is for a producer to fetch the schema from the Schema Registry prior to producing data. One thing to note is that I decided to serialize the date as a long. While this can be convenient in development, I suggest disabling this functionality in production (auto.register.schemas property). A Kafka record, on the other hand, consists of a key and a value, and each of them can have separate serialization. You can configure the compatibility setting, which supports the evolution of schemas using Avro. to serialize records that are produced to Apache Kafka while allowing evolution of schemas and nonsynchronous update of producer and consumer applications. This is fine in a file where you store the schema once and use it for a high number of records. For more information on schema compatibility, consult the Avro specification for Schema Resolution. The Schema Registry actually doesn't know anything about our Kafka topics (we have not even created the persons-avro topic yet), and it is a convention (used by the serializers) to register schemas under a name that follows the
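As a rough illustration of registering a schema over the REST interface, the sketch below builds (but does not send) the HTTP request with the JDK's `java.net.http` API (Java 11+) rather than the OkHttp client used in the original example. It assumes the registry's `POST /subjects/{subject}/versions` endpoint, the `application/vnd.schemaregistry.v1+json` content type, and a `<topic>-key` / `<topic>-value` subject name, which matches the persons-avro-value subject seen earlier. The class and method names are hypothetical.

```java
import java.net.URI;
import java.net.http.HttpRequest;

/** Hypothetical helper that prepares a schema registration request. */
final class RegisterSchemaRequest {
    /** Subject name under the assumed topic-based convention. */
    static String subjectFor(String topic, boolean isKey) {
        return topic + (isKey ? "-key" : "-value");
    }

    /** Encodes text as a JSON string literal (enough for typical schema text). */
    static String toJsonString(String raw) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : raw.toCharArray()) {
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n");  break;
                case '\r': sb.append("\\r");  break;
                case '\t': sb.append("\\t");  break;
                default:   sb.append(c);
            }
        }
        return sb.append('"').toString();
    }

    /** Builds the POST request; the Avro schema is embedded as an escaped string. */
    static HttpRequest build(String registryUrl, String subject, String avroSchemaJson) {
        String body = "{\"schema\": " + toJsonString(avroSchemaJson) + "}";
        return HttpRequest.newBuilder(
                        URI.create(registryUrl + "/subjects/" + subject + "/versions"))
                .header("Content-Type", "application/vnd.schemaregistry.v1+json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = build("http://localhost:8081",
                subjectFor("persons-avro", false), "{\"type\": \"string\"}");
        System.out.println(request.method() + " " + request.uri());
    }
}
```

To actually register the schema, the request could be sent with `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())` against a running registry. In production, registering schemas this way (or through a build step) pairs naturally with disabling `auto.register.schemas` on producers.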

